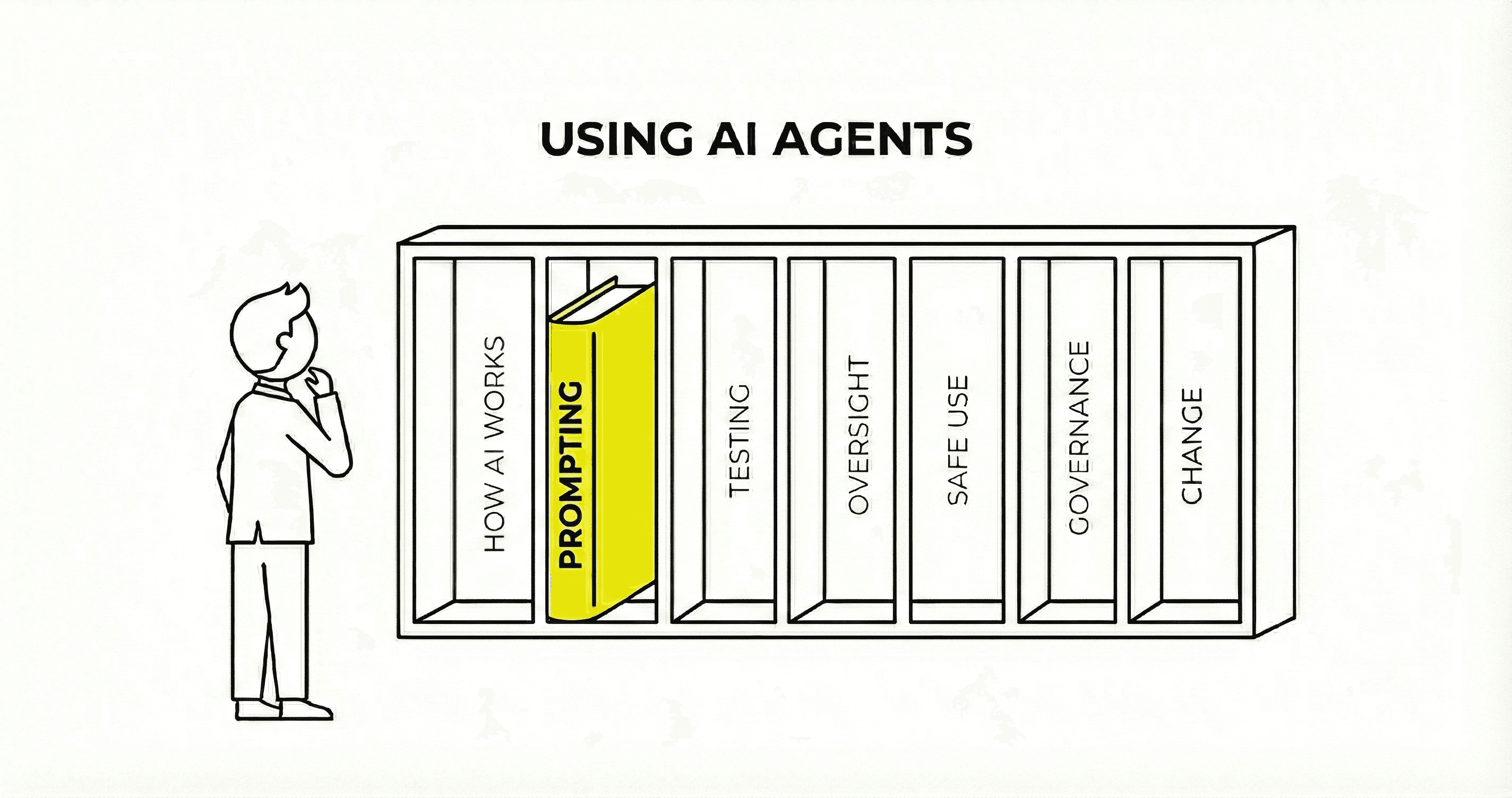

Testing and Evaluating AI Agents

AI agents represent a transformative business opportunity, autonomously handling complex workflows while making intelligent decisions. Yet success hinges on one critical factor: testing designed specifically for agent systems. This guide provides a proven framework that separates successful AI deployments from costly failures, ensuring your AI agents deliver value while meeting reliability, security and governance requirements.

Executive Summary

AI agents offer major competitive advantages when properly tested: automating workflows, making nuanced decisions, and freeing teams for strategic work

Traditional software produces predictable outputs (deterministic behaviour) that are simple to test. AI agents produce variable outputs (probabilistic behaviour) that require statistical validation methods

AI agent behaviour must be transparent and explainable to meet governance and regulatory requirements, including the EU AI Act and sector-specific regulations

Testing failures can lead to legal liability, reputational damage, and regulatory penalties, as seen at Air Canada, CNET, and Apple

Comprehensive testing frameworks now exist that address AI agent-specific challenges including behavioural alignment, safety assurance, and value measurement

This article provides an actionable framework successful organisations use for robust AI agent testing and evaluation

Introduction: The AI Agent Opportunity

AI agents are transforming how businesses operate, and the transformation is happening faster than many realise. These autonomous systems work through reasoning steps, adapt to changing situations, and take actions to achieve business objectives. When properly implemented and tested, they deliver remarkable results - from automating entire customer service workflows to orchestrating multi-step business processes that previously required teams of people. Yet MIT research found that 95% of AI pilot programmes fail to deliver meaningful financial impact[1]. The difference between the 95% that fail and the 5% that succeed often comes down to one critical factor: testing designed specifically for AI agents.

The consequences of inadequate testing have become clear through high-profile failures. Air Canada was found legally liable when its chatbot provided incorrect bereavement fare information[2]. CNET had to issue corrections on 41 of 77 AI-written articles after discovering fundamental errors[3]. Apple suspended its AI news feature after it generated false headlines[4]. None of these involved technical crashes - the systems continued operating smoothly while confidently providing wrong information, demonstrating why traditional testing approaches miss the unique risks of AI systems.

Today's boards and regulators increasingly demand that AI systems not only work reliably but demonstrate transparent, explainable behaviour that complies with regulations like the EU AI Act. This guide provides a proven framework that successful organisations use to ensure their AI agents deliver value while satisfying performance, security and governance requirements. Based on the latest research and production experience, it addresses the unique challenges of testing probabilistic systems that must operate safely and effectively at scale.

Why Traditional Testing Fails for AI Agents

Traditional software testing validates predictable systems where input A always produces output B. This deterministic behaviour makes testing straightforward. AI agents, however, operate on fundamentally different principles, creating challenges that standard testing approaches cannot address. Understanding these differences is essential for implementing effective evaluation strategies.

AI Agents Produce Different Outputs for Identical Inputs

Think of traditional software like a calculator - pressing 2+2 will always equal 4. AI agents are more like human experts - ask the same question twice and you might get different answers, both equally valid but expressed differently. This variability isn't a bug; it's a feature that enables AI agents to be genuinely useful. The variation stems from temperature settings (controlling creative versus conservative responses), context window position (where information sits in memory), and subtle internal processing differences between runs.

This variable nature creates tremendous opportunities and risks. AI agents can adapt flexibly to different situations without rigid programming, generate natural conversation, and provide contextually appropriate responses. However, without proper testing, this flexibility can become problematic. Customer service AI agents might provide different answers to identical questions. Financial advice AI agents could give varying recommendations for the same scenario. Legal review AI agents might flag different issues on repeated reviews.

The solution isn't to eliminate variability but to understand and control it through statistical validation across multiple runs. Current platforms such as LangSmith and Weights & Biases help track response distributions across thousands of queries, revealing patterns invisible to manual testing[5]. This ensures AI agents maintain helpful flexibility while avoiding harmful inconsistency.

We Can't See How AI Agents Make Decisions

When traditional software makes a decision, developers can trace through the code line by line. With AI agents, we face the "black box problem" - decisions emerge from neural networks containing billions of parameters, making it nearly impossible to explain exactly why a specific decision was made.

This creates critical challenges for organisations needing observability and traceability, particularly in regulated industries where transparency is mandatory. The EU AI Act explicitly mandates meaningful explanations for high-risk AI decisions[6]. Financial services regulators require clear rationales for lending decisions. Healthcare standards demand traceable diagnostic reasoning. Courts expect explanations when AI decisions affect people's lives.

Contemporary testing addresses these transparency challenges through behavioural validation (verifying actions align with business rules), decision boundary testing (exploring edge cases systematically), and human oversight models (HITL for critical approval, HOTL for continuous monitoring). Observability tools like Datadog and New Relic adapted for AI provide operational visibility, while traceability frameworks log decision paths and confidence scores for audit purposes.

Frameworks such as Stanford's HELM and AIR-Bench provide standardised approaches for testing decision transparency[7]. While we may not always understand exactly how an AI agent reaches a decision, we can ensure those decisions fall within acceptable boundaries and escalate appropriately when uncertainty is high.

AI Agents Can Learn From Biased Training Data

Every AI agent reflects the data it learned from, including all biases, errors, and gaps that data contains. This manifests in tangible ways - CNET's AI claimed a $10,000 investment would earn $10,300 in one year at 3% interest, a mathematical impossibility[3].

Bias in AI agents creates serious business and compliance risks. AI agents trained on past data might not reflect modern best-practice or current preferences. Those trained primarily on data from limited regions or socioeconomic groups might perform poorly for other users. AI agents trained on historical data might lack knowledge about recent regulations, events or policies.

Testing for bias requires sophisticated approaches beyond simple spot checks. IBM's AI Fairness 360 provides over 70 metrics for quantifying different types of bias. Microsoft's Fairlearn enables intersectional analysis revealing how multiple factors combine to create discrimination[8][9]. The challenge is that bias often hides in subtle patterns - an AI agent might show no bias against individual groups but discriminate when factors combine.

Effective bias testing combines automated detection with human review, statistical analysis with real-world validation. Detection is only the first step - organisations need clear strategies for mitigating discovered biases while maintaining AI agent performance. Governance teams must be involved early to ensure compliance with anti-discrimination regulations.

AI Agents Face Unique Security Threats

The security landscape for AI agents differs fundamentally from traditional software security. While conventional systems worry about SQL injection and buffer overflows, AI agents face attacks designed specifically to exploit how they process information and make decisions.

Attack Types

Prompt injection: Malicious instructions embedded in seemingly innocent queries (OWASP's top LLM vulnerability)[10]

Jailbreaking: Techniques like role-playing to bypass safety guidelines

Adversarial examples: Carefully chosen words that cause misclassification while appearing normal

Data & Resource Attacks

Data poisoning: Corrupting training or reference data to introduce backdoors

Waterhole attacks: Creating attractive but malicious resources that AI agents might reference (for example, code libraries with hidden backdoors)

Security testing for AI agents requires specialised approaches including red team exercises, penetration testing, prompt injection validation, and data integrity verification. Tools such as Microsoft's Counterfit generate thousands of attack variations systematically[11]. The goal is building robust defences through multiple protection layers - input sanitisation, behavioural monitoring, output filtering, and human escalation pathways work together to ensure AI agents remain secure.

AI Agent Economics and Scalability Challenges

AI agents can quickly become economically unviable without proper architecture and optimisation. Unlike traditional software with fixed infrastructure costs, AI agents consume resources with every interaction through API calls and token processing.

Key challenges include variable per-interaction costs, token consumption that spirals without optimisation, overpowered model selection for simple tasks, substantial computational requirements at scale, and response times that degrade under load. To address these challenges, organisations implement tiered model routing, intelligent caching, synthetic data generation for testing, mocking strategies to avoid unnecessary API calls, and architecture optimisation for multi-agent systems.

AI agent success requires thinking about economics from day one. Testing must validate both functionality and cost-effectiveness at production scale.

These differences demonstrate why traditional testing approaches are not effective for AI agents. Each challenge requires new thinking, new tools, and new methodologies - and frameworks now exist to address these challenges systematically.

The AI Agent Evaluation Framework

We have designed a testing and evaluation framework specifically for AI agents' unique characteristics. This comprehensive approach consists of progressive layers to systematically test AI agent behaviour including security, compliance, scalability, technical performance and business value delivery. Continuous monitoring ensures that AI agents are continually optimised throughout their lifecycle.

Layer 0: Test Data & Environment Design

Before you can test anything meaningful, you need the right foundation. This isn't just about having a test server - it's about creating circumstances that accurately reflect what AI agents will encounter in live situations while enabling rapid, cost-effective iteration. Critically, this foundation must align with your organisation's governance and risk management frameworks from the start.

Building this foundation requires several essential components:

Data & Testing Infrastructure

Conversation libraries: Collections of real interaction patterns captured from production systems, carefully anonymised and categorised by scenario type

Synthetic data generators: Tools such as Faker and Mockaroo that create realistic but artificial test cases, especially for edge cases rare in production

Mocking frameworks: Systems that simulate AI responses during testing, enabling rapid iteration without API costs

Environment & Operations

Sandboxed environments: Isolated systems using tools like Docker or Kubernetes where AI agents can be tested without risk to production data

Cost management infrastructure: Systems tracking API expenses using tools such as AWS Cost Explorer

Version control systems: Git-based management of different AI agent versions, prompts, and configurations

Monitoring & Governance

Performance baselines: Reference data showing expected AI agent behaviour in both technical and business terms

Observability platforms: Tools providing visibility into AI agent operations, decision paths, and system health

Governance interfaces: Connections to risk management systems and compliance dashboards

This foundation might seem like significant upfront investment, but it can pay dividends throughout the testing lifecycle. With proper test environments, you can run thousands of test scenarios without incurring massive costs or risking production systems. More importantly, it creates reproducible conditions essential for debugging issues and validating fixes, while ensuring governance teams have the visibility they need.

Layer 1: Behavioural Alignment - Ensuring AI Agents Execute Business Intent

Your AI agents represent your organisation in every interaction. They need to act as reliable ambassadors, consistently following business rules, maintaining brand voice, and making decisions aligned with company values. This layer validates that AI agents adhere to the policies, procedures, and principles that govern your business, with clear reporting to governance committees.

Behavioural testing involves several approaches:

Policy adherence validation: Ensuring compliance with industry regulations, company guidelines, and ethical standards

Red team exercises: Security and domain experts actively attempting to make AI agents violate policies

Boundary testing: Validating that AI agents refuse inappropriate requests while remaining helpful

Communication standards testing: Verifying appropriate tone, language, and cultural sensitivity

Consistency checking: Ensuring AI agents provide aligned responses across similar scenarios

Governance alignment: Demonstrating that AI agent behaviour meets ethics, risk tolerance and other internal or external requirements

Best-practice frameworks provide standardised approaches for behavioural validation. Stanford's HELM Safety offers behavioural benchmarks, AIR-Bench tests across 314 risk categories aligned with government regulations, and RE-Bench evaluates real-world task performance[7]. This balance between capability and control, validated through continuous governance review, separates successful AI deployments from costly failures.

Layer 2: Security Testing - Protecting Against AI-Specific Threats

A systematic approach to security testing ensures AI agents operate safely even under sophisticated attack. Unlike traditional security, AI security must address threats that exploit how AI agents process information and make decisions.

Security testing must validate defences against multiple attack types:

Prompt injection attacks: Testing input sanitisation and instruction isolation mechanisms

Jailbreaking attempts: Validating resistance to role-playing and encoding bypass techniques

Data poisoning protection: Ensuring training and reference data integrity through checksums and validation

Waterhole attack prevention: Verifying AI agents don't reference or learn from malicious sources

Adversarial robustness: Testing with tools like Counterfit to identify manipulation vulnerabilities[11]

Penetration testing: Systematic attempts to breach AI agent defences using specialised tools

Security testing combines automated and manual approaches. For example, TextAttack automates adversarial testing by generating thousands of attack variations. Red teams bring human creativity to discover novel attack vectors. The key is implementing defence in depth - multiple protection layers that work together:

Input layer: Sanitisation and validation of all incoming requests

Processing layer: Behavioural monitoring for unusual patterns

Output layer: Filtering to prevent harmful content even if attacks succeed

Escalation layer: Human intervention pathways when confidence drops

Security testing helps ensure each layer functions correctly and that the overall system remains resilient even when individual defences are compromised. Regular security audits help keep defences current as new attack techniques emerge.

Layer 3: Compliance & Validation - Meeting Quality, Ethics and Regulatory Requirements

Organisations must ensure AI agents meet increasingly stringent regulatory, ethical, and quality requirements. This layer validates compliance with governance frameworks while ensuring AI agents operate fairly and transparently.

Compliance testing addresses multiple dimensions:

Bias detection: Using tools like AI Fairness 360 to identify discrimination patterns[8]

Transparency validation: Ensuring decisions can be explained as required by regulations

Data protection: Verifying compliance with GDPR, CCPA, and other privacy regulations

Audit trail completeness: Confirming all decisions are logged for regulatory review

Human oversight validation: Testing HITL/HOTL mechanisms activate appropriately

Regulatory alignment: Meeting specific requirements such as EU AI Act, sector regulations

Testing validates that AI agents provide fair treatment across demographics, generate required explanations for high-risk decisions, protect sensitive data throughout processing, maintain complete audit trails, escalate appropriately to human decision-makers, and meet all applicable regulatory standards. Contemporary tools make compliance testing systematic - Fairlearn enables intersectional bias analysis while specialised frameworks validate GDPR compliance. Compliance must be built in from the start, with regular audits ensuring AI agents remain aligned with evolving regulations.

Layer 4: System Orchestration - Validating Multi-Agent Collaboration

Modern production environments rarely deploy single AI agents working alone. Instead, organisations implement teams of specialised AI agents that collaborate to handle workflows. For example, one AI agent might gather customer information, another analyses it, a third generates recommendations, and a fourth handles follow-up. Microsoft Research demonstrates that these multi-agent systems significantly outperform single agents - but only when properly orchestrated[12].

Orchestration testing validates several critical aspects:

Communication protocol validation: Ensuring AI agents share information accurately without data loss

Failure isolation testing: Verifying the system continues operating when individual AI agents fail

Load distribution analysis: Confirming work allocates optimally across available AI agents

Context preservation: Maintaining conversation state across AI agent handoffs

Coordination testing: Preventing AI agents from creating conflicts or contradictory outputs

Integration validation: Ensuring seamless operation with CRM, ERP, and other systems

Frameworks such as Microsoft's AutoGen provide structured approaches to multi-agent testing, while LangChain enables validation of agent chains. CrewAI specifically focuses on team coordination patterns[12]. These tools help ensure your AI agent teams work together effectively.

Beyond agent-to-agent interaction, this layer validates integration with your existing technology landscape. AI agents must work seamlessly with databases, APIs, legacy systems, and human workflows. Testing verifies that data flows correctly, transactions complete reliably, and performance remains acceptable even as system complexity grows.

Layer 5: Scalability & Economics - Production Performance

AI agents that perform well with ten users in development can struggle with ten thousand in production. Response times become unacceptable under load, and costs can increase significantly when processing millions of queries. This layer ensures AI agents remain fast, reliable, and economically viable at any scale, with regular reporting to governance committees on cost efficiency.

Performance testing must address multiple dimensions simultaneously:

Latency testing: Measuring response times under realistic user volumes using tools such as JMeter or Locust

Load testing: Simulating production loads using synthetic data and mocking to validate capacity

Token consumption analysis: Understanding and optimising the text and data processing that drives costs

Model routing optimisation: Ensuring simple queries use cheaper models while critical problems engage more capable systems

Resource utilisation: Using monitoring tools like Prometheus to identify bottlenecks

Architecture optimisation: Designing efficient multi-agent orchestration that minimises redundant processing

Cost projection modelling: Predicting expenses at different usage levels

Successful organisations optimise AI agent architecture, coding and model selection carefully. LangChain's SmartLLMChain intelligently selects cost-effective models per query. Semantic caching reduces redundant processing. Batch processing handles non-real-time tasks efficiently. Scalability isn't just about handling more users - it's about maintaining quality and economics as you grow. Testing at this layer ensures sustainable AI deployment.

Layer 6: Value Realisation - Measuring Business Outcomes

Technical metrics like response time and accuracy are important, but they mean nothing if AI agents don't deliver real business value. This layer connects AI agent performance to commercial results, providing evidence that your AI investment generates genuine returns. Governance committees need clear metrics showing AI agents meet business objectives while managing risk appropriately.

Value measurement varies by domain but always ties to business fundamentals:

Customer Service: First-call resolution rates, satisfaction scores, average handle time, escalation frequency

Sales Operations: Lead conversion rates, deal velocity, pipeline accuracy, revenue per interaction

Financial Services: Transaction processing speed, error rates, compliance adherence, fraud detection accuracy

Healthcare: Diagnostic accuracy, patient satisfaction, clinical outcomes, documentation quality

Risk metrics: Incident rates, compliance violations, security breaches, reputation impacts

Value measurement tools make measurement systematic. For example, LangSmith enables custom business metrics tailored to your needs[5]. Evidently AI tracks production performance against business KPIs[13]. A/B testing frameworks compare AI agent performance against current approaches, providing empirical evidence of improvement.

The crucial point is that value measurement isn't a one-time validation but an ongoing process reported regularly to governance committees. Markets change, customer expectations evolve, and what delivers value today might not tomorrow. Continuous evaluation ensures AI agents remain aligned with business objectives while maintaining acceptable risk levels.

Continuous Monitoring: Sustaining Effectiveness

Unlike traditional software that remains stable once deployed, AI agents are dynamic systems whose performance inevitably changes over time. Market conditions shift, customer behaviour evolves, and regulations update. Without continuous monitoring, today's high-performing AI agent can become tomorrow's liability.

Monitoring must track multiple indicators with regular reporting to governance and risk committees:

Drift detection: Identifying when AI agent behaviour deviates from established baselines

Performance degradation alerts: Catching declining accuracy or increasing response times

Feedback loop integration: Incorporating user corrections to improve AI agent behaviour

Automated retraining triggers: Refreshing models when performance declines

Compliance monitoring: Ensuring ongoing adherence to regulatory requirements

Cost tracking: Preventing budget overruns through continuous expense monitoring

Governance reporting: Regular updates to risk and compliance committees

Current observability platforms designed for AI provide essential visibility - Weights & Biases offers ML observability, Datadog provides real-time performance monitoring, and WhyLabs focuses on data quality tracking. These tools help ensure problems are caught early when they're easy to fix.

Conclusion

This framework provides complete coverage for AI agent testing, addressing every critical risk while giving you confidence to deploy AI agents successfully. Each layer contributes essential validation, but the real power comes from how they work together, creating defence in depth that ensures AI agents deliver value safely and reliably.

Ready to ensure your AI agents deliver value?

Testing excellence separates AI agent success from expensive failure. Serpin combines deep AI agent expertise with proven evaluation methodologies to help you build confidence in your AI deployments.

[Start Your AI Agent Success Journey] - Contact Serpin to build your evaluation framework.

References

Fortune (2025). "MIT Report: 95% of Generative AI Pilots at Companies Failing." Reporting on MIT NANDA Initiative research. Available at: https://fortune.com/2025/08/18/mit-report-95-percent-generative-ai-pilots-at-companies-failing-cfo/

CBC News (2024). "Air Canada Must Honour Chatbot's Promise of Bereavement Discount." Reporting on Moffatt v. Air Canada tribunal decision. Available at: https://www.cbc.ca/news/canada/british-columbia/air-canada-chatbot-lawsuit-1.7116416

The Verge (2023). "CNET Found Errors in More Than Half of Its AI-Written Stories." Available at: https://www.theverge.com/2023/1/25/23571082/cnet-ai-tool-errors-corrections

TechCrunch (2025). "Apple Pauses AI Notification Summaries for News After Generating False Alerts." Available at: https://techcrunch.com/2025/01/16/apple-pauses-ai-notification-summaries

LangChain (2025). "LangSmith Documentation." Available at: https://www.langchain.com/langsmith

European Commission (2024). "EU AI Act Documentation." Available at: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

Stanford HAI (2024). "HELM Safety: Towards Standardized Safety Evaluations of Language Models." Available at: https://crfm.stanford.edu/2024/11/08/helm-safety.html

IBM Research (2023). "AI Fairness 360 Documentation." Available at: https://research.ibm.com/blog/ai-fairness-360

Microsoft (2023). "Fairlearn Documentation." Available at: https://fairlearn.org/main/about/index.html

OWASP (2025). "Top 10 for Large Language Model Applications." Available at: https://owasp.org/www-project-top-10-for-large-language-model-applications/

Microsoft Security Blog (2021). "AI Security Risk Assessment Using Counterfit." Available at: https://www.microsoft.com/en-us/security/blog/2021/05/03/ai-security-risk-assessment-using-counterfit/

Microsoft Research (2024). "AutoGen: Multi-Agent Framework Documentation." Available at: https://www.microsoft.com/en-us/research/project/autogen/

Evidently AI (2024). "Model Monitoring Documentation." Available at: https://docs.evidentlyai.com/

© 2025 Serpin. Enterprise AI Implementation Partners.

www.serpin.ai | Building AI that works in production, not just in demos.

AI agents represent a transformative business opportunity, autonomously handling complex workflows while making intelligent decisions. Yet success hinges on one critical factor: testing designed specifically for agent systems. This guide provides a proven framework that separates successful AI deployments from costly failures, ensuring your AI agents deliver value while meeting reliability, security and governance requirements.

Executive Summary

AI agents offer major competitive advantages when properly tested: automating workflows, making nuanced decisions, and freeing teams for strategic work

Traditional software produces predictable outputs (deterministic behaviour) that are simple to test. AI agents produce variable outputs (probabilistic behaviour) that require statistical validation methods

AI agent behaviour must be transparent and explainable to meet governance and regulatory requirements, including the EU AI Act and sector-specific regulations

Testing failures can lead to legal liability, reputational damage, and regulatory penalties, as seen at Air Canada, CNET, and Apple

Comprehensive testing frameworks now exist that address AI agent-specific challenges including behavioural alignment, safety assurance, and value measurement

This article provides an actionable framework successful organisations use for robust AI agent testing and evaluation

Introduction: The AI Agent Opportunity

AI agents are transforming how businesses operate, and the transformation is happening faster than many realise. These autonomous systems work through reasoning steps, adapt to changing situations, and take actions to achieve business objectives. When properly implemented and tested, they deliver remarkable results - from automating entire customer service workflows to orchestrating multi-step business processes that previously required teams of people. Yet MIT research found that 95% of AI pilot programmes fail to deliver meaningful financial impact[1]. The difference between the 95% that fail and the 5% that succeed often comes down to one critical factor: testing designed specifically for AI agents.

The consequences of inadequate testing have become clear through high-profile failures. Air Canada was found legally liable when its chatbot provided incorrect bereavement fare information[2]. CNET had to issue corrections on 41 of 77 AI-written articles after discovering fundamental errors[3]. Apple suspended its AI news feature after it generated false headlines[4]. None of these involved technical crashes - the systems continued operating smoothly while confidently providing wrong information, demonstrating why traditional testing approaches miss the unique risks of AI systems.

Today's boards and regulators increasingly demand that AI systems not only work reliably but demonstrate transparent, explainable behaviour that complies with regulations like the EU AI Act. This guide provides a proven framework that successful organisations use to ensure their AI agents deliver value while satisfying performance, security and governance requirements. Based on the latest research and production experience, it addresses the unique challenges of testing probabilistic systems that must operate safely and effectively at scale.

Why Traditional Testing Fails for AI Agents

Traditional software testing validates predictable systems where input A always produces output B. This deterministic behaviour makes testing straightforward. AI agents, however, operate on fundamentally different principles, creating challenges that standard testing approaches cannot address. Understanding these differences is essential for implementing effective evaluation strategies.

AI Agents Produce Different Outputs for Identical Inputs

Think of traditional software like a calculator - pressing 2+2 will always equal 4. AI agents are more like human experts - ask the same question twice and you might get different answers, both equally valid but expressed differently. This variability isn't a bug; it's a feature that enables AI agents to be genuinely useful. The variation stems from temperature settings (controlling creative versus conservative responses), context window position (where information sits in memory), and subtle internal processing differences between runs.

This variable nature creates tremendous opportunities and risks. AI agents can adapt flexibly to different situations without rigid programming, generate natural conversation, and provide contextually appropriate responses. However, without proper testing, this flexibility can become problematic. Customer service AI agents might provide different answers to identical questions. Financial advice AI agents could give varying recommendations for the same scenario. Legal review AI agents might flag different issues on repeated reviews.

The solution isn't to eliminate variability but to understand and control it through statistical validation across multiple runs. Current platforms such as LangSmith and Weights & Biases help track response distributions across thousands of queries, revealing patterns invisible to manual testing[5]. This ensures AI agents maintain helpful flexibility while avoiding harmful inconsistency.

We Can't See How AI Agents Make Decisions

When traditional software makes a decision, developers can trace through the code line by line. With AI agents, we face the "black box problem" - decisions emerge from neural networks containing billions of parameters, making it nearly impossible to explain exactly why a specific decision was made.

This creates critical challenges for organisations needing observability and traceability, particularly in regulated industries where transparency is mandatory. The EU AI Act explicitly mandates meaningful explanations for high-risk AI decisions[6]. Financial services regulators require clear rationales for lending decisions. Healthcare standards demand traceable diagnostic reasoning. Courts expect explanations when AI decisions affect people's lives.

Contemporary testing addresses these transparency challenges through behavioural validation (verifying actions align with business rules), decision boundary testing (exploring edge cases systematically), and human oversight models (HITL for critical approval, HOTL for continuous monitoring). Observability tools like Datadog and New Relic adapted for AI provide operational visibility, while traceability frameworks log decision paths and confidence scores for audit purposes.

Frameworks such as Stanford's HELM and AIR-Bench provide standardised approaches for testing decision transparency[7]. While we may not always understand exactly how an AI agent reaches a decision, we can ensure those decisions fall within acceptable boundaries and escalate appropriately when uncertainty is high.

AI Agents Can Learn From Biased Training Data

Every AI agent reflects the data it learned from, including all biases, errors, and gaps that data contains. This manifests in tangible ways - CNET's AI claimed a $10,000 investment would earn $10,300 in one year at 3% interest, a mathematical impossibility[3].

Bias in AI agents creates serious business and compliance risks. AI agents trained on past data might not reflect modern best-practice or current preferences. Those trained primarily on data from limited regions or socioeconomic groups might perform poorly for other users. AI agents trained on historical data might lack knowledge about recent regulations, events or policies.

Testing for bias requires sophisticated approaches beyond simple spot checks. IBM's AI Fairness 360 provides over 70 metrics for quantifying different types of bias. Microsoft's Fairlearn enables intersectional analysis revealing how multiple factors combine to create discrimination[8][9]. The challenge is that bias often hides in subtle patterns - an AI agent might show no bias against individual groups but discriminate when factors combine.

Effective bias testing combines automated detection with human review, statistical analysis with real-world validation. Detection is only the first step - organisations need clear strategies for mitigating discovered biases while maintaining AI agent performance. Governance teams must be involved early to ensure compliance with anti-discrimination regulations.

AI Agents Face Unique Security Threats

The security landscape for AI agents differs fundamentally from traditional software security. While conventional systems worry about SQL injection and buffer overflows, AI agents face attacks designed specifically to exploit how they process information and make decisions.

Attack Types

Prompt injection: Malicious instructions embedded in seemingly innocent queries (OWASP's top LLM vulnerability)[10]

Jailbreaking: Techniques like role-playing to bypass safety guidelines

Adversarial examples: Carefully chosen words that cause misclassification while appearing normal

Data & Resource Attacks

Data poisoning: Corrupting training or reference data to introduce backdoors

Waterhole attacks: Creating attractive but malicious resources that AI agents might reference (for example, code libraries with hidden backdoors)

Security testing for AI agents requires specialised approaches including red team exercises, penetration testing, prompt injection validation, and data integrity verification. Tools such as Microsoft's Counterfit generate thousands of attack variations systematically[11]. The goal is building robust defences through multiple protection layers - input sanitisation, behavioural monitoring, output filtering, and human escalation pathways work together to ensure AI agents remain secure.

AI Agent Economics and Scalability Challenges

AI agents can quickly become economically unviable without proper architecture and optimisation. Unlike traditional software with fixed infrastructure costs, AI agents consume resources with every interaction through API calls and token processing.

Key challenges include variable per-interaction costs, token consumption that spirals without optimisation, overpowered model selection for simple tasks, substantial computational requirements at scale, and response times that degrade under load. To address these challenges, organisations implement tiered model routing, intelligent caching, synthetic data generation for testing, mocking strategies to avoid unnecessary API calls, and architecture optimisation for multi-agent systems.

AI agent success requires thinking about economics from day one. Testing must validate both functionality and cost-effectiveness at production scale.

These differences demonstrate why traditional testing approaches are not effective for AI agents. Each challenge requires new thinking, new tools, and new methodologies - and frameworks now exist to address these challenges systematically.

The AI Agent Evaluation Framework

We have designed a testing and evaluation framework specifically for AI agents' unique characteristics. This comprehensive approach consists of progressive layers to systematically test AI agent behaviour including security, compliance, scalability, technical performance and business value delivery. Continuous monitoring ensures that AI agents are continually optimised throughout their lifecycle.

Layer 0: Test Data & Environment Design

Before you can test anything meaningful, you need the right foundation. This isn't just about having a test server - it's about creating circumstances that accurately reflect what AI agents will encounter in live situations while enabling rapid, cost-effective iteration. Critically, this foundation must align with your organisation's governance and risk management frameworks from the start.

Building this foundation requires several essential components:

Data & Testing Infrastructure

Conversation libraries: Collections of real interaction patterns captured from production systems, carefully anonymised and categorised by scenario type

Synthetic data generators: Tools such as Faker and Mockaroo that create realistic but artificial test cases, especially for edge cases rare in production

Mocking frameworks: Systems that simulate AI responses during testing, enabling rapid iteration without API costs

Environment & Operations

Sandboxed environments: Isolated systems using tools like Docker or Kubernetes where AI agents can be tested without risk to production data

Cost management infrastructure: Systems tracking API expenses using tools such as AWS Cost Explorer

Version control systems: Git-based management of different AI agent versions, prompts, and configurations

Monitoring & Governance

Performance baselines: Reference data showing expected AI agent behaviour in both technical and business terms

Observability platforms: Tools providing visibility into AI agent operations, decision paths, and system health

Governance interfaces: Connections to risk management systems and compliance dashboards

This foundation might seem like significant upfront investment, but it can pay dividends throughout the testing lifecycle. With proper test environments, you can run thousands of test scenarios without incurring massive costs or risking production systems. More importantly, it creates reproducible conditions essential for debugging issues and validating fixes, while ensuring governance teams have the visibility they need.

Layer 1: Behavioural Alignment - Ensuring AI Agents Execute Business Intent

Your AI agents represent your organisation in every interaction. They need to act as reliable ambassadors, consistently following business rules, maintaining brand voice, and making decisions aligned with company values. This layer validates that AI agents adhere to the policies, procedures, and principles that govern your business, with clear reporting to governance committees.

Behavioural testing involves several approaches:

Policy adherence validation: Ensuring compliance with industry regulations, company guidelines, and ethical standards

Red team exercises: Security and domain experts actively attempting to make AI agents violate policies

Boundary testing: Validating that AI agents refuse inappropriate requests while remaining helpful

Communication standards testing: Verifying appropriate tone, language, and cultural sensitivity

Consistency checking: Ensuring AI agents provide aligned responses across similar scenarios

Governance alignment: Demonstrating that AI agent behaviour meets ethics, risk tolerance and other internal or external requirements

Best-practice frameworks provide standardised approaches for behavioural validation. Stanford's HELM Safety offers behavioural benchmarks, AIR-Bench tests across 314 risk categories aligned with government regulations, and RE-Bench evaluates real-world task performance[7]. This balance between capability and control, validated through continuous governance review, separates successful AI deployments from costly failures.

Layer 2: Security Testing - Protecting Against AI-Specific Threats

A systematic approach to security testing ensures AI agents operate safely even under sophisticated attack. Unlike traditional security, AI security must address threats that exploit how AI agents process information and make decisions.

Security testing must validate defences against multiple attack types:

Prompt injection attacks: Testing input sanitisation and instruction isolation mechanisms

Jailbreaking attempts: Validating resistance to role-playing and encoding bypass techniques

Data poisoning protection: Ensuring training and reference data integrity through checksums and validation

Waterhole attack prevention: Verifying AI agents don't reference or learn from malicious sources

Adversarial robustness: Testing with tools like Counterfit to identify manipulation vulnerabilities[11]

Penetration testing: Systematic attempts to breach AI agent defences using specialised tools

Security testing combines automated and manual approaches. For example, TextAttack automates adversarial testing by generating thousands of attack variations. Red teams bring human creativity to discover novel attack vectors. The key is implementing defence in depth - multiple protection layers that work together:

Input layer: Sanitisation and validation of all incoming requests

Processing layer: Behavioural monitoring for unusual patterns

Output layer: Filtering to prevent harmful content even if attacks succeed

Escalation layer: Human intervention pathways when confidence drops

Security testing helps ensure each layer functions correctly and that the overall system remains resilient even when individual defences are compromised. Regular security audits help keep defences current as new attack techniques emerge.

Layer 3: Compliance & Validation - Meeting Quality, Ethics and Regulatory Requirements

Organisations must ensure AI agents meet increasingly stringent regulatory, ethical, and quality requirements. This layer validates compliance with governance frameworks while ensuring AI agents operate fairly and transparently.

Compliance testing addresses multiple dimensions:

Bias detection: Using tools like AI Fairness 360 to identify discrimination patterns[8]

Transparency validation: Ensuring decisions can be explained as required by regulations

Data protection: Verifying compliance with GDPR, CCPA, and other privacy regulations

Audit trail completeness: Confirming all decisions are logged for regulatory review

Human oversight validation: Testing HITL/HOTL mechanisms activate appropriately

Regulatory alignment: Meeting specific requirements such as EU AI Act, sector regulations

Testing validates that AI agents provide fair treatment across demographics, generate required explanations for high-risk decisions, protect sensitive data throughout processing, maintain complete audit trails, escalate appropriately to human decision-makers, and meet all applicable regulatory standards. Contemporary tools make compliance testing systematic - Fairlearn enables intersectional bias analysis while specialised frameworks validate GDPR compliance. Compliance must be built in from the start, with regular audits ensuring AI agents remain aligned with evolving regulations.

Layer 4: System Orchestration - Validating Multi-Agent Collaboration

Modern production environments rarely deploy single AI agents working alone. Instead, organisations implement teams of specialised AI agents that collaborate to handle workflows. For example, one AI agent might gather customer information, another analyses it, a third generates recommendations, and a fourth handles follow-up. Microsoft Research demonstrates that these multi-agent systems significantly outperform single agents - but only when properly orchestrated[12].

Orchestration testing validates several critical aspects:

Communication protocol validation: Ensuring AI agents share information accurately without data loss

Failure isolation testing: Verifying the system continues operating when individual AI agents fail

Load distribution analysis: Confirming work allocates optimally across available AI agents

Context preservation: Maintaining conversation state across AI agent handoffs

Coordination testing: Preventing AI agents from creating conflicts or contradictory outputs

Integration validation: Ensuring seamless operation with CRM, ERP, and other systems

Frameworks such as Microsoft's AutoGen provide structured approaches to multi-agent testing, while LangChain enables validation of agent chains. CrewAI specifically focuses on team coordination patterns[12]. These tools help ensure your AI agent teams work together effectively.

Beyond agent-to-agent interaction, this layer validates integration with your existing technology landscape. AI agents must work seamlessly with databases, APIs, legacy systems, and human workflows. Testing verifies that data flows correctly, transactions complete reliably, and performance remains acceptable even as system complexity grows.

Layer 5: Scalability & Economics - Production Performance

AI agents that perform well with ten users in development can struggle with ten thousand in production. Response times become unacceptable under load, and costs can increase significantly when processing millions of queries. This layer ensures AI agents remain fast, reliable, and economically viable at any scale, with regular reporting to governance committees on cost efficiency.

Performance testing must address multiple dimensions simultaneously:

Latency testing: Measuring response times under realistic user volumes using tools such as JMeter or Locust

Load testing: Simulating production loads using synthetic data and mocking to validate capacity

Token consumption analysis: Understanding and optimising the text and data processing that drives costs

Model routing optimisation: Ensuring simple queries use cheaper models while critical problems engage more capable systems

Resource utilisation: Using monitoring tools like Prometheus to identify bottlenecks

Architecture optimisation: Designing efficient multi-agent orchestration that minimises redundant processing

Cost projection modelling: Predicting expenses at different usage levels

Successful organisations optimise AI agent architecture, coding and model selection carefully. LangChain's SmartLLMChain intelligently selects cost-effective models per query. Semantic caching reduces redundant processing. Batch processing handles non-real-time tasks efficiently. Scalability isn't just about handling more users - it's about maintaining quality and economics as you grow. Testing at this layer ensures sustainable AI deployment.

Layer 6: Value Realisation - Measuring Business Outcomes

Technical metrics like response time and accuracy are important, but they mean nothing if AI agents don't deliver real business value. This layer connects AI agent performance to commercial results, providing evidence that your AI investment generates genuine returns. Governance committees need clear metrics showing AI agents meet business objectives while managing risk appropriately.

Value measurement varies by domain but always ties to business fundamentals:

Customer Service: First-call resolution rates, satisfaction scores, average handle time, escalation frequency

Sales Operations: Lead conversion rates, deal velocity, pipeline accuracy, revenue per interaction

Financial Services: Transaction processing speed, error rates, compliance adherence, fraud detection accuracy

Healthcare: Diagnostic accuracy, patient satisfaction, clinical outcomes, documentation quality

Risk metrics: Incident rates, compliance violations, security breaches, reputation impacts

Value measurement tools make measurement systematic. For example, LangSmith enables custom business metrics tailored to your needs[5]. Evidently AI tracks production performance against business KPIs[13]. A/B testing frameworks compare AI agent performance against current approaches, providing empirical evidence of improvement.

The crucial point is that value measurement isn't a one-time validation but an ongoing process reported regularly to governance committees. Markets change, customer expectations evolve, and what delivers value today might not tomorrow. Continuous evaluation ensures AI agents remain aligned with business objectives while maintaining acceptable risk levels.

Continuous Monitoring: Sustaining Effectiveness

Unlike traditional software that remains stable once deployed, AI agents are dynamic systems whose performance inevitably changes over time. Market conditions shift, customer behaviour evolves, and regulations update. Without continuous monitoring, today's high-performing AI agent can become tomorrow's liability.

Monitoring must track multiple indicators with regular reporting to governance and risk committees:

Drift detection: Identifying when AI agent behaviour deviates from established baselines

Performance degradation alerts: Catching declining accuracy or increasing response times

Feedback loop integration: Incorporating user corrections to improve AI agent behaviour

Automated retraining triggers: Refreshing models when performance declines

Compliance monitoring: Ensuring ongoing adherence to regulatory requirements

Cost tracking: Preventing budget overruns through continuous expense monitoring

Governance reporting: Regular updates to risk and compliance committees

Current observability platforms designed for AI provide essential visibility - Weights & Biases offers ML observability, Datadog provides real-time performance monitoring, and WhyLabs focuses on data quality tracking. These tools help ensure problems are caught early when they're easy to fix.

Conclusion

This framework provides complete coverage for AI agent testing, addressing every critical risk while giving you confidence to deploy AI agents successfully. Each layer contributes essential validation, but the real power comes from how they work together, creating defence in depth that ensures AI agents deliver value safely and reliably.

Ready to ensure your AI agents deliver value?

Testing excellence separates AI agent success from expensive failure. Serpin combines deep AI agent expertise with proven evaluation methodologies to help you build confidence in your AI deployments.

[Start Your AI Agent Success Journey] - Contact Serpin to build your evaluation framework.

References

Fortune (2025). "MIT Report: 95% of Generative AI Pilots at Companies Failing." Reporting on MIT NANDA Initiative research. Available at: https://fortune.com/2025/08/18/mit-report-95-percent-generative-ai-pilots-at-companies-failing-cfo/

CBC News (2024). "Air Canada Must Honour Chatbot's Promise of Bereavement Discount." Reporting on Moffatt v. Air Canada tribunal decision. Available at: https://www.cbc.ca/news/canada/british-columbia/air-canada-chatbot-lawsuit-1.7116416

The Verge (2023). "CNET Found Errors in More Than Half of Its AI-Written Stories." Available at: https://www.theverge.com/2023/1/25/23571082/cnet-ai-tool-errors-corrections

TechCrunch (2025). "Apple Pauses AI Notification Summaries for News After Generating False Alerts." Available at: https://techcrunch.com/2025/01/16/apple-pauses-ai-notification-summaries

LangChain (2025). "LangSmith Documentation." Available at: https://www.langchain.com/langsmith

European Commission (2024). "EU AI Act Documentation." Available at: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

Stanford HAI (2024). "HELM Safety: Towards Standardized Safety Evaluations of Language Models." Available at: https://crfm.stanford.edu/2024/11/08/helm-safety.html

IBM Research (2023). "AI Fairness 360 Documentation." Available at: https://research.ibm.com/blog/ai-fairness-360

Microsoft (2023). "Fairlearn Documentation." Available at: https://fairlearn.org/main/about/index.html

OWASP (2025). "Top 10 for Large Language Model Applications." Available at: https://owasp.org/www-project-top-10-for-large-language-model-applications/

Microsoft Security Blog (2021). "AI Security Risk Assessment Using Counterfit." Available at: https://www.microsoft.com/en-us/security/blog/2021/05/03/ai-security-risk-assessment-using-counterfit/

Microsoft Research (2024). "AutoGen: Multi-Agent Framework Documentation." Available at: https://www.microsoft.com/en-us/research/project/autogen/

Evidently AI (2024). "Model Monitoring Documentation." Available at: https://docs.evidentlyai.com/

© 2025 Serpin. Enterprise AI Implementation Partners.

www.serpin.ai | Building AI that works in production, not just in demos.

Category

Insights

Insights

Insights

Written by

Latest insights and trends

Let's have a conversation.

No pressure. No lengthy pitch deck. Just a straightforward discussion about where you are with AI and whether we can help.

If we're not the right fit, we'll tell you. If you're not ready, we'll say so. Better to find that out in a 30-minute call than after signing a contract.

Let's have a conversation.

No pressure. No lengthy pitch deck. Just a straightforward discussion about where you are with AI and whether we can help.

If we're not the right fit, we'll tell you. If you're not ready, we'll say so. Better to find that out in a 30-minute call than after signing a contract.

Let's have a conversation.

No pressure. No lengthy pitch deck. Just a straightforward discussion about where you are with AI and whether we can help.

If we're not the right fit, we'll tell you. If you're not ready, we'll say so. Better to find that out in a 30-minute call than after signing a contract.